Many organizations have a requirement to hold some data for longer periods of time. This may be for compliance reasons or simply for long-term record retention.

Although backup solutions do offer long-term retention options, for archival purposes Azure Storage offers a specific archiving tier. We covered the storage tiers in Chapter 9, Exploring Storage Solutions, but to recap, you can configure a storage account as Hot, Cool, or Archive:

- Hot is used for data that is accessed frequently – this is what you would normally choose for a day-to-day storage solution.

- Cool is for data that is accessed infrequently. It is lower-cost than the Hot tier, but it has slightly lower availability.

- Archive storage is design for data that will be kept long-term, but that will be rarely, if ever, accessed once written to. Archive storage is the cheapest option for storage; however, there are costs for reading data from it.

When creating a storage account, you set the default access tier, but you can only choose between hot and cool. After creation, an individual blob can then have its properties modified to move to another tier, including Archive.

Important Note

Only blob storage and General Purpose v2 (GPv2) storage accounts support tiering, and Microsoft recommends using GPv2 over blob accounts as they offer more features for a similar cost.

An important consideration for Archive-tier storage is that it is offline storage. This means once data is set as to be in the Archive tier, it is considered offline. This has the effect that the data can no longer be directly read or modified; if you do need to access the data, you must first rehydrate it.

When rehydrating data, you can either change the blob tier back to hot or cool, or you can copy the data to a hot or cool tier. Note that this process can take hours to complete. You can set a rehydrate priority on a blob by setting x-ms-rehydrate-priority when changing the blob tier back to hot or cool, or copying it.

Standard priority is the lowest cost but has an service-level agreement (SLA) of 15 hours, whereas High priority can be performed in under an hour but is more expensive. However, that timeline is not under SLA; all that is guaranteed is the operation will have priority over a standard request.

Although you can manually change the tiering of a blob, this might not always be practical, unless you know the data must be archived as soon as you write it.

Sometimes you may only want to archive data if it hasn’t been accessed for a period of time. In these scenarios, you can set up a life cycle management rule to automatically move data.

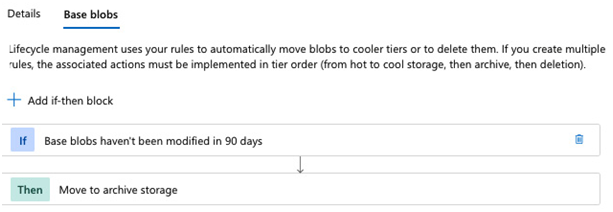

You can create multiple rules in a storage account’s life cycle management blade, and for each rule, you can define how many days since a blob has been modified and whether to move it to a Cool or Archive tier, or even delete the file.

The following screenshot shows a simple rule that moves data to the Archive tier if it has not been modified for 90 days:

Figure 16.6 – Example life cycle management rule

By building multiple rules, you can set up complex workflows. For example, you could create multiple rules that move data to the Cool tier after 30 days, then to the Archive tier after 90 days, and then to finally delete the data after a year.

The ability to delete data after a set period can be crucial as many countries have strict rules on how long personal data can be kept for.

Through a combination of a well-defined backup solution and archiving policy, you can ensure your applications make the best use of storage based on costs and access requirements.

Summary

In this chapter, we looked at how an organization’s DR requirements can differ and how the different Azure Backup mechanisms can be used to achieve varying levels of RTO, RPO, and data retention requirements.

We looked at how Azure Backup provides a scalable solution for backing up standard VMs as well as SQL on VMs, PostgreSQL on VMs, and SAP HANA on VMs.

Next, we looked at native database backup solutions and saw how Azure SQL and Azure Cosmos DB automatically perform backups for you, but how you can tweak them to your own requirements.

Finally, we looked at data archiving solutions and how we can automate the movement of data between the Hot, Cool, and Archive tiers, or even delete it through the use of life cycle management rules, which are a core feature of storage accounts.

In the next chapter, we continue our operations theme by looking at how we can automate the deployment of services using Azure DevOps.

Exam scenario

MegaCorp Inc. hosts an application that stores and manages customer calls in Azure.

The system comprises a web application running on a VM, with an Azure SQL database for the customer records. The records themselves are updated regularly.

As an internal application, the system is not considered critical, and it has been built in a single Azure region. In the event of an entire region failure, management would like to be able to fail over to a paired region; a downtime of 24 hours would be acceptable, but any data loss needs to minimal.

The management team would like to ensure database backups are taken and kept every month and retained for a year in case they ever need to examine historical data that could have been overwritten.

The VM does not contain any data, just the web application, and therefore only changes as new versions are rolled out.

Design a solution that will allow the business to recover in the event of an entire region outage.

Leave a Reply